Generative AI for Self-Hosted Environments

Sisense Intelligence empowers analytics through advanced Generative AI capabilities. These capabilities are fully integrated into Sisense Managed Cloud environments, and self-hosted customers can now use them through independently managed infrastructure. This lets on-premises or self-hosted environments retain complete control while benefiting from AI-driven analytics.

Note:

This topic is specifically for setting up self-hosted Generative AI. For more background and general information about Generative AI, see Generative AI (Cloud-Linked Features) - Empowering Your Analytics Experience.

Uniqueness of Self-Hosted Environments

In self-hosted scenarios, customers directly manage their own infrastructure, including the provisioning and maintenance of both the Vector Database (MongoDB Atlas VDB) and Large Language Model (LLM) services, through Azure OpenAI or OpenAI APIs. This setup provides enhanced data sovereignty and deeper integration possibilities within customers' own IT ecosystems.

Prerequisites

For the successful use of AI capabilities in a self-hosted environment, ensure the following:

- Sisense version L2025.2 or newer, regularly updated to align with evolving beta features
- MongoDB Atlas Cluster (minimum recommended size: M10)
- Public Network Access: open web access through an IP allowlist
- Public IP: defined and accessible for your Sisense instance
- Monitor and manage the deployment in line with Sisense guidance
- Accept that additional services may be required as the architecture matures

Getting Started

Before enabling Cloud-Linked Features (Generative AI), ensure you have access to your LLM key and Vector DB connection string.

- For detailed instructions on how to set up your LLM, and supported models, see Setting Up Your LLM.
- For detailed instructions on how to set up your VDB, see Setting Up Your Vector Database (VDB).

Enabling Cloud-Linked Features (Generative AI)

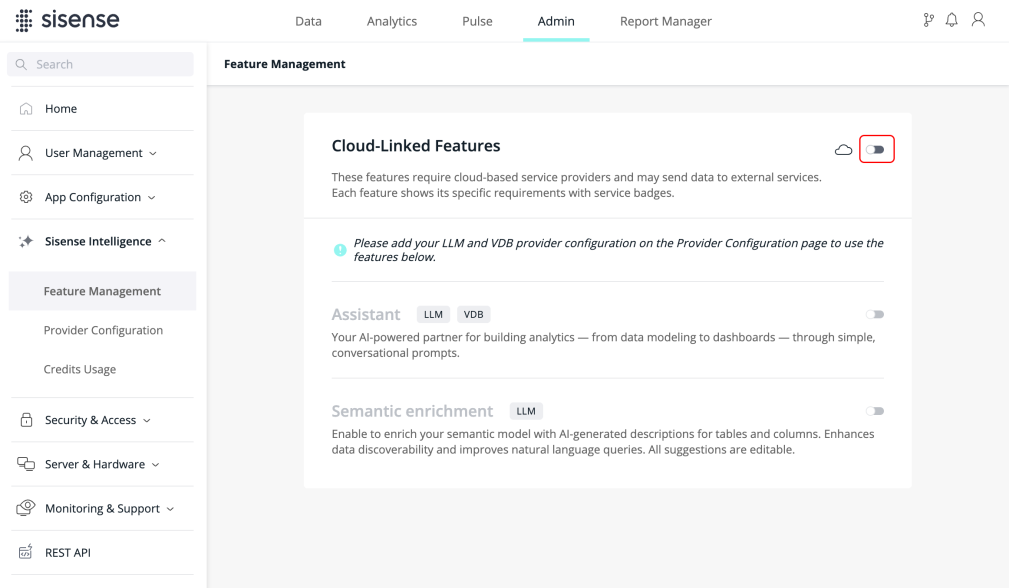

A Sisense Administrator can enable (or disable) Cloud-Linked Features as follows:

- Search for “Sisense Intelligence” in the search bar or open the App Configuration drop-down.
- Click Feature Management.
- Enable Cloud-Linked Features via the toggle.

When this toggle is disabled, you will not be able to access the GenAI features that require your own LLM (Narrative is controlled separately).

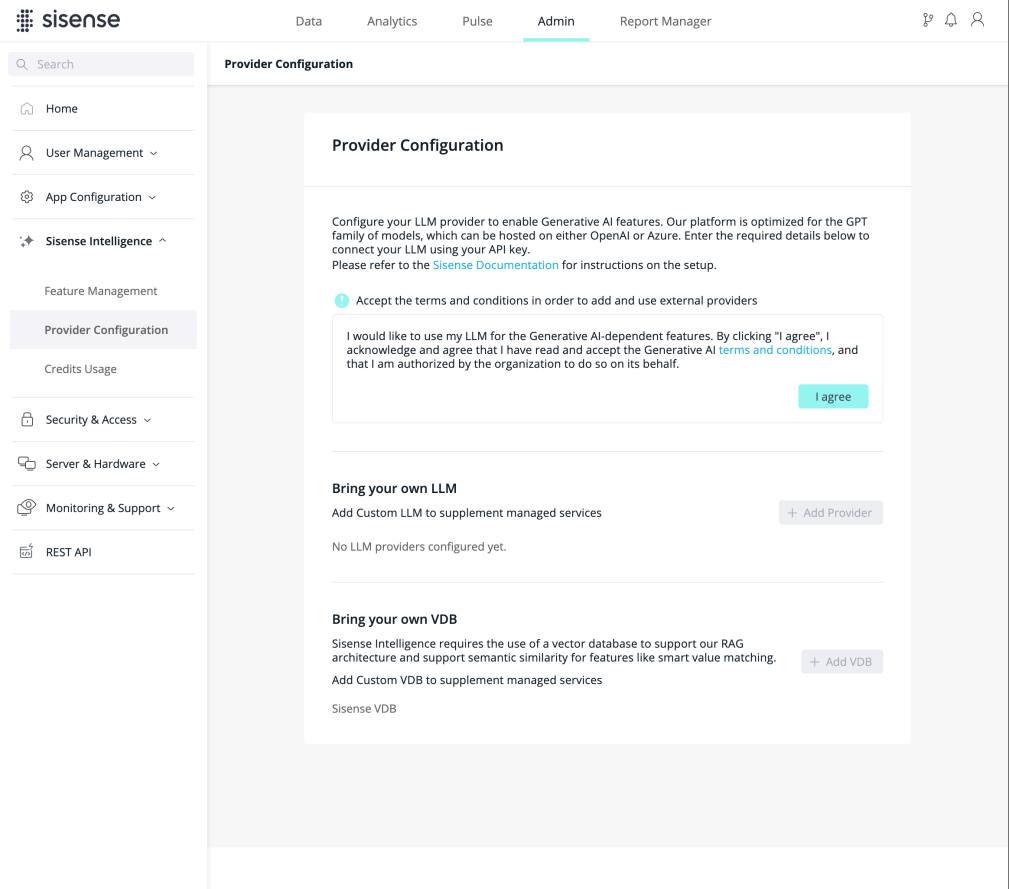

Configuring Your Provider

- In the Admin tab, select Sisense Intelligence > Provider Configuration.
- Click I agree to consent to the Generative AI terms and conditions.

Connecting Your LLM

To enable Generative AI features, Sisense requires integration with a supported Large Language Model (LLM). This section outlines how to connect your LLM.

-

In the Admin tab, select Sisense Intelligence > Provider Configuration (see the figure above).

-

In the Bring your own LLM section, click + Add Provider.

In order to proceed, you must configure your own LLM API key.

-

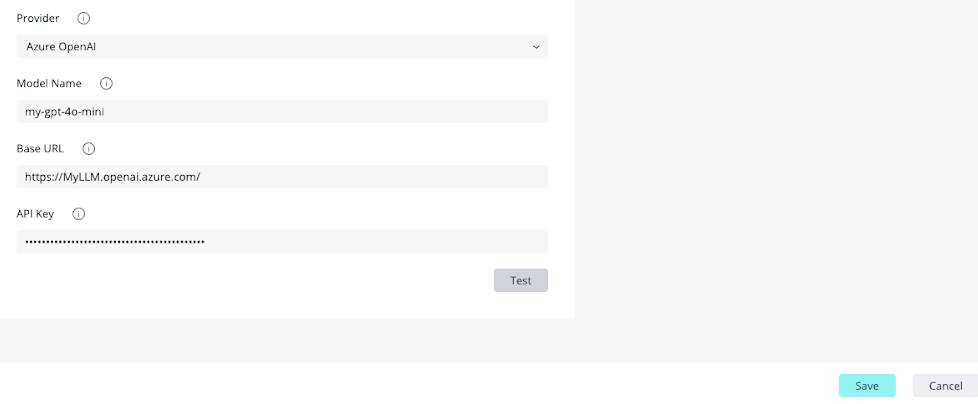

Open the LLM Provider drop-down list and select your preferred provider.

-

Once you have selected your provider, enter the configuration details, including your LLM API key, to complete the setup.

- Azure OpenAI:

| Name | Description | Example |
| --- | --- | --- |
| Model Name | Model deployment name you chose during the deployment process on your LLM platform. | my-gpt-4o-mini |
| Base URL | Endpoint URL of your LLM instance. | https://MyLLM.openai.azure.com/ |
| API Key | API key for authenticating and authorizing your requests to the model's API. | |

- OpenAI:

| Name | Description | Example |
| --- | --- | --- |
| Model Name | Model name corresponding to your OpenAI API key (see the OpenAI supported models list). This should be the exact model identifier used for API requests. Note: Ensure that the key permissions are set to All (not Restricted/ReadOnly). See Setting Up Your LLM for more information. | gpt-4o-mini-2024-07-18 |
| Base URL | Endpoint URL of your LLM instance. | https://api.openai.com/v1/ |
| Organization | Only applicable if you use the OpenAI organization. If troubleshooting becomes necessary, and this field is filled in, try removing it. | |
| API Key | API key for authenticating and authorizing your requests to the model's API. It usually has the form shown in the Example column. | sk-proj-<the_rest_of_your_api_key> |

- Click Test to validate your configuration.
- Once the test is successful, click Save to apply your configuration.
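If the Test step fails, it can help to confirm outside Sisense that the key, base URL, and model or deployment name are valid. The following is a minimal Python sketch of such a pre-check, not part of Sisense. It assumes the public OpenAI "list models" endpoint and the Azure OpenAI chat-completions endpoint. The function names are illustrative, and the Azure `api_version` default is an assumption that should be checked against your Azure resource.

```python
import json
import urllib.error
import urllib.request
from typing import Optional


def build_openai_check(base_url: str, api_key: str,
                       organization: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request that lists the models visible to an OpenAI API key."""
    url = base_url.rstrip("/") + "/models"
    headers = {"Authorization": f"Bearer {api_key}"}
    if organization:
        # Only send the organization header when the Organization field is used.
        headers["OpenAI-Organization"] = organization
    return urllib.request.Request(url, headers=headers, method="GET")


def build_azure_check(base_url: str, deployment: str, api_key: str,
                      api_version: str = "2024-02-01") -> urllib.request.Request:
    """Build a one-token chat request against an Azure OpenAI deployment.

    api_version is an assumption; use a version your Azure resource supports.
    """
    url = (f"{base_url.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({
        "messages": [{"role": "user", "content": "ping"}],
        "max_tokens": 1,
    }).encode("utf-8")
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def http_status(req: urllib.request.Request, timeout: float = 15.0) -> int:
    """Send the request and return the HTTP status code (200 means accepted)."""
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

For example, `http_status(build_openai_check("https://api.openai.com/v1/", "sk-proj-..."))` returning 401 would point to a problem with the key rather than with the Sisense configuration, while a 404 from the Azure check usually indicates a wrong deployment name or base URL.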

Connecting Your Vector Database

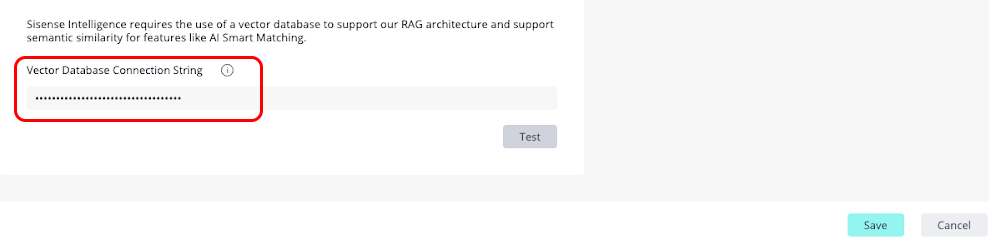

To set up your vector database connection:

- In the Admin tab, select Sisense Intelligence > Provider Configuration (see the figure above).
- In the Bring your own VDB section, click + Add VDB.
- In the Vector Database Connection String field, paste the connection string for your supported vector database.
- Click Test to validate the connection.
- Once the test is successful, click Save to apply your configuration.
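Before pasting the connection string, a quick local sanity check can catch common copy-and-paste mistakes. The sketch below is illustrative only and makes no connection to the database. It assumes a standard MongoDB connection string of the form `mongodb+srv://<user>:<password>@<host>/`, as shown in the MongoDB Atlas UI. The function name `check_vdb_connection_string` is hypothetical.

```python
from typing import List
from urllib.parse import urlsplit


def check_vdb_connection_string(conn: str) -> List[str]:
    """Return a list of problems found in a MongoDB connection string.

    An empty list means the string looks structurally plausible; it does
    not prove that the cluster is reachable or the credentials are valid.
    """
    problems: List[str] = []
    parts = urlsplit(conn.strip())

    if parts.scheme not in ("mongodb", "mongodb+srv"):
        problems.append("scheme should be mongodb:// or mongodb+srv://")
    if not parts.hostname:
        problems.append("host name is missing")
    if not parts.username or parts.password is None:
        problems.append("user name or password is missing")
    if "<" in conn or ">" in conn:
        # Atlas shows templates such as <password>; these must be replaced.
        problems.append("placeholder such as <password> was not replaced")
    return problems


if __name__ == "__main__":
    sample = "mongodb+srv://user:<password>@cluster0.example.mongodb.net/"
    for problem in check_vdb_connection_string(sample):
        print("-", problem)
```

A string that passes this check can still fail the Test button, for instance when the IP address of the Sisense instance is not on the Atlas IP allowlist mentioned in the prerequisites.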

API Reference

To set up your Vector DB connection via REST API:

- Under Admin, navigate to REST API and select version 2.0.
- Use POST /settings/ai/vdb/ to add or update the VDB connection string used for AI settings configuration.
- Use the POST /ai/vdb/test method to test the connection to your VDB.
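The two calls above could be scripted roughly as follows. This is a hedged sketch, not the documented request format. The `/api/v2` prefix and Bearer-token header follow the usual Sisense REST API 2.0 conventions, but the JSON field name `connectionString` is an assumption. Confirm the actual request schema in the REST API reference of your instance before use.

```python
import json
import urllib.request

API_PREFIX = "/api/v2"             # assumed prefix for REST API version 2.0
SAVE_PATH = "/settings/ai/vdb/"    # add or update the VDB connection string
TEST_PATH = "/ai/vdb/test"         # test the connection to the VDB


def build_vdb_request(sisense_url: str, token: str, path: str,
                      connection_string: str) -> urllib.request.Request:
    """Build a POST request for one of the VDB endpoints.

    The payload field name "connectionString" is hypothetical; replace it
    with the field shown in your instance's REST API reference.
    """
    url = sisense_url.rstrip("/") + API_PREFIX + path
    body = json.dumps({"connectionString": connection_string}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(req: urllib.request.Request, timeout: float = 30.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

A typical flow would be to build and send the request for `TEST_PATH` first, and to send the request for `SAVE_PATH` only once the test succeeds, mirroring the Test and Save buttons in the UI.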