Setting Up Your LLM

To enable Sisense Generative AI features that use Large Language Models (LLMs), you must first set up an LLM provider: OpenAI or Azure OpenAI Service. With Azure OpenAI, this involves creating a resource in a region that supports your model, deploying a supported model, and obtaining access credentials. With OpenAI, you create an API key that has the necessary permissions. You then configure Sisense with the provider settings, including the model name, API key, and endpoint URL. Sisense supports several versions of GPT models, and you are responsible for configuring a supported version for optimal compatibility.

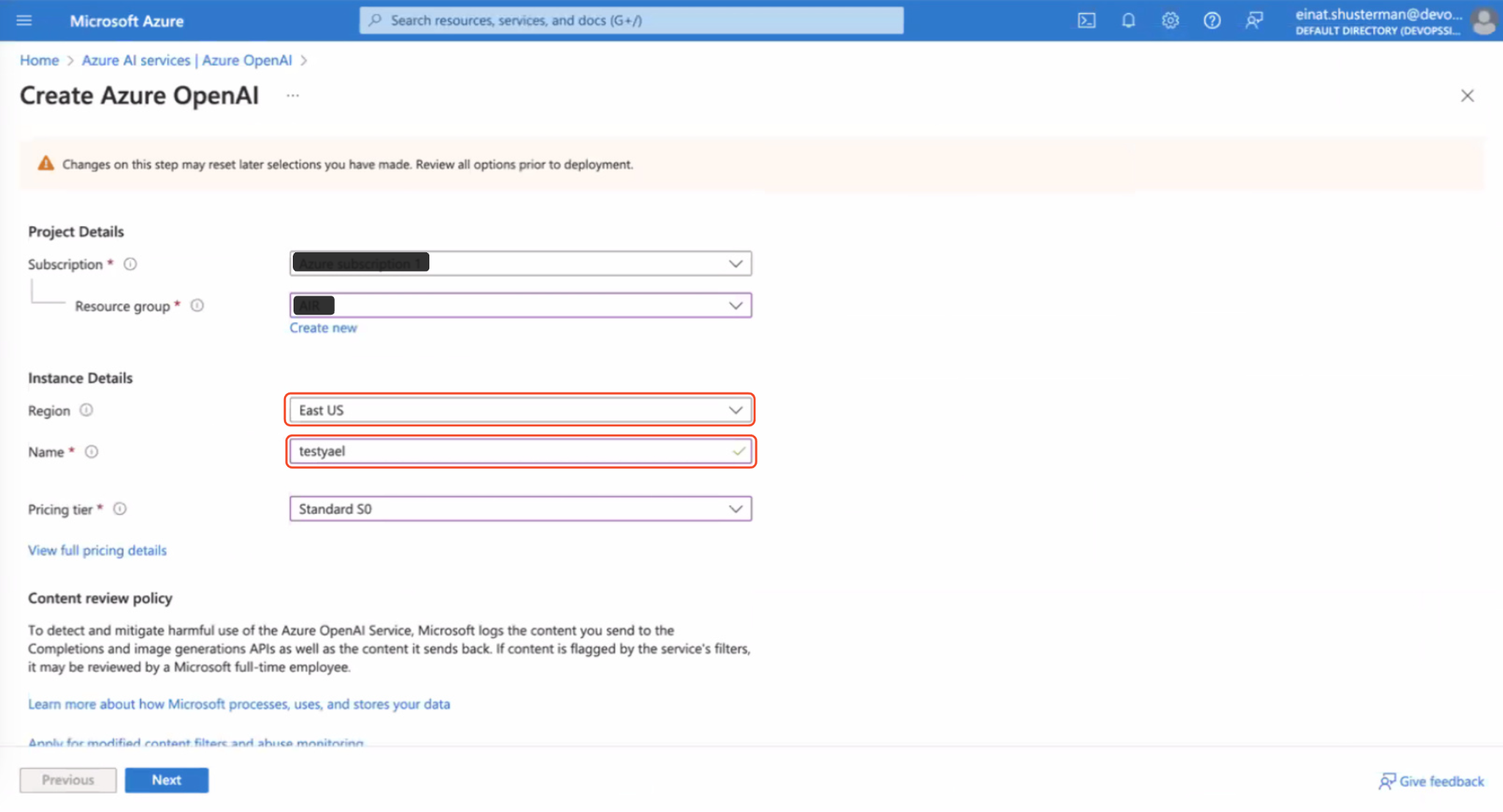

Creating an Azure OpenAI Resource

- From the Region drop-down, select a region that supports the model version.

-

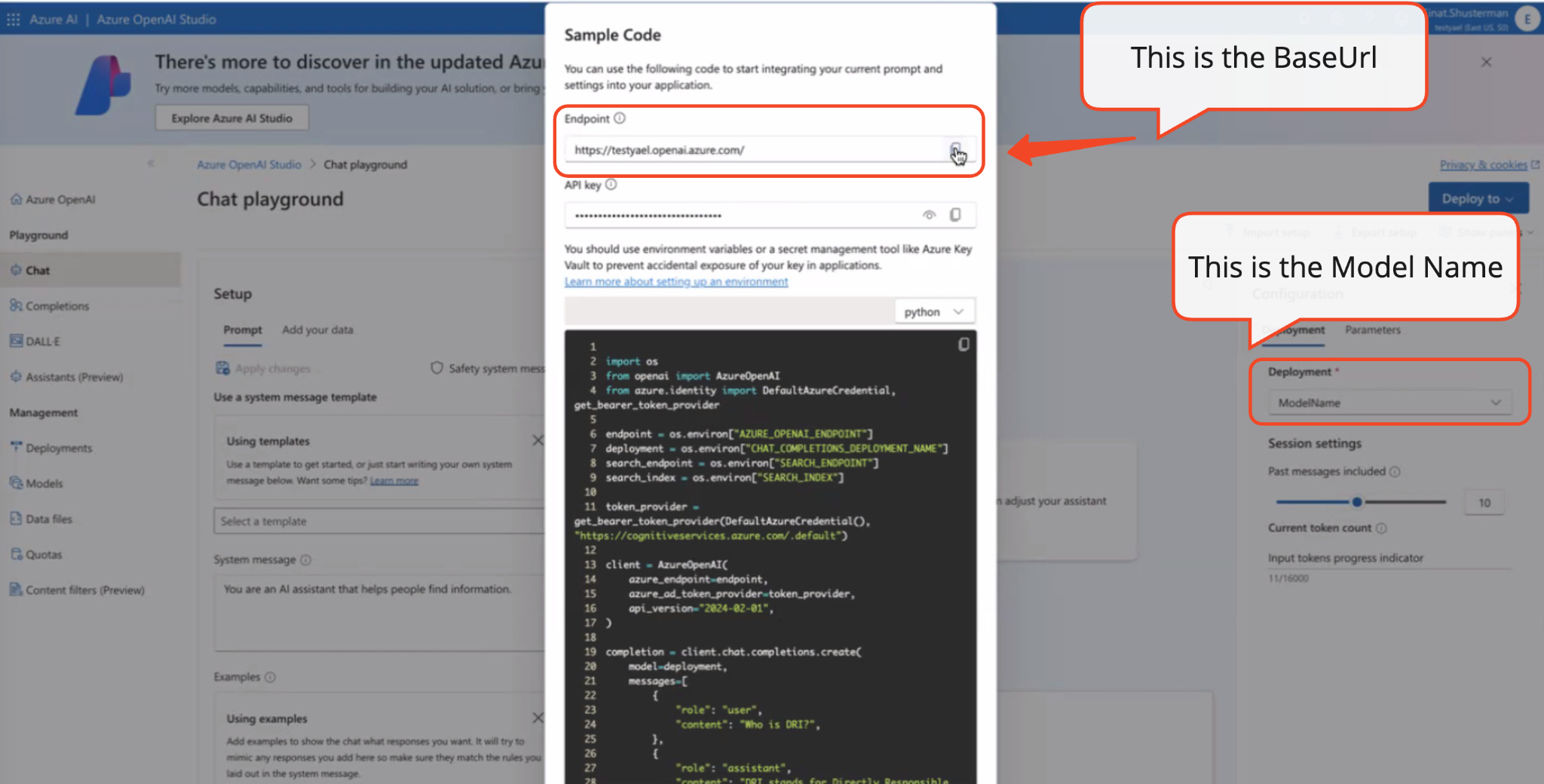

In the Name field, type an instance name. The instance name will be part of the endpoint name (Base URL).

For more information, see Create and Deploy an Azure resource and Azure OpenAI Service Models.

-

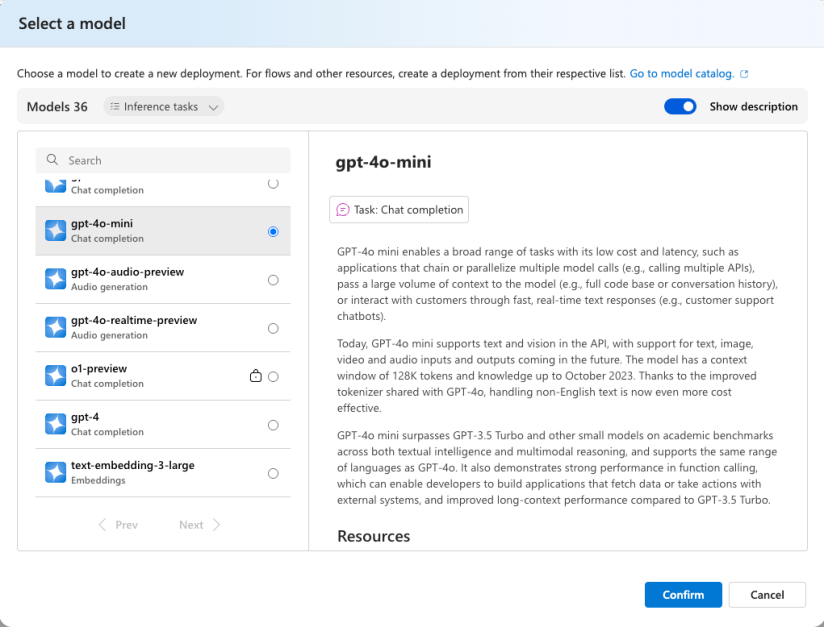

Follow the Azure documentation to deploy a base model on the resource you created. For more information, see Azure OpenAI Service Models.

-

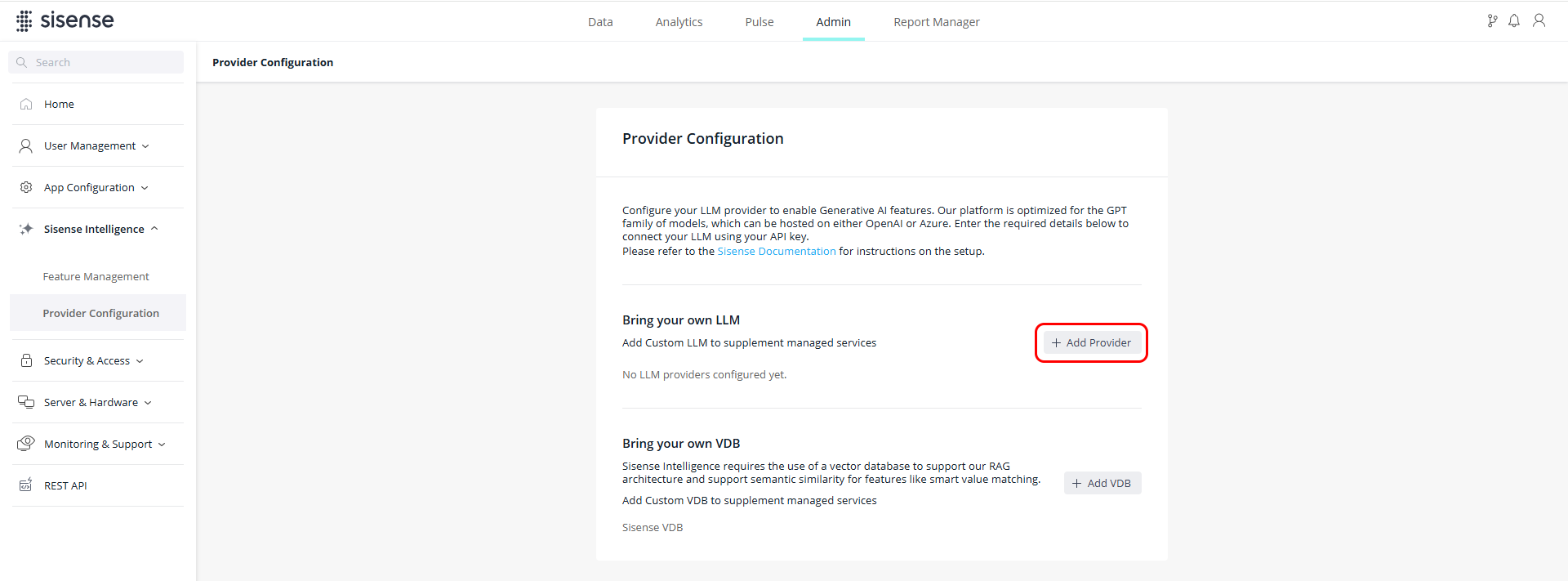

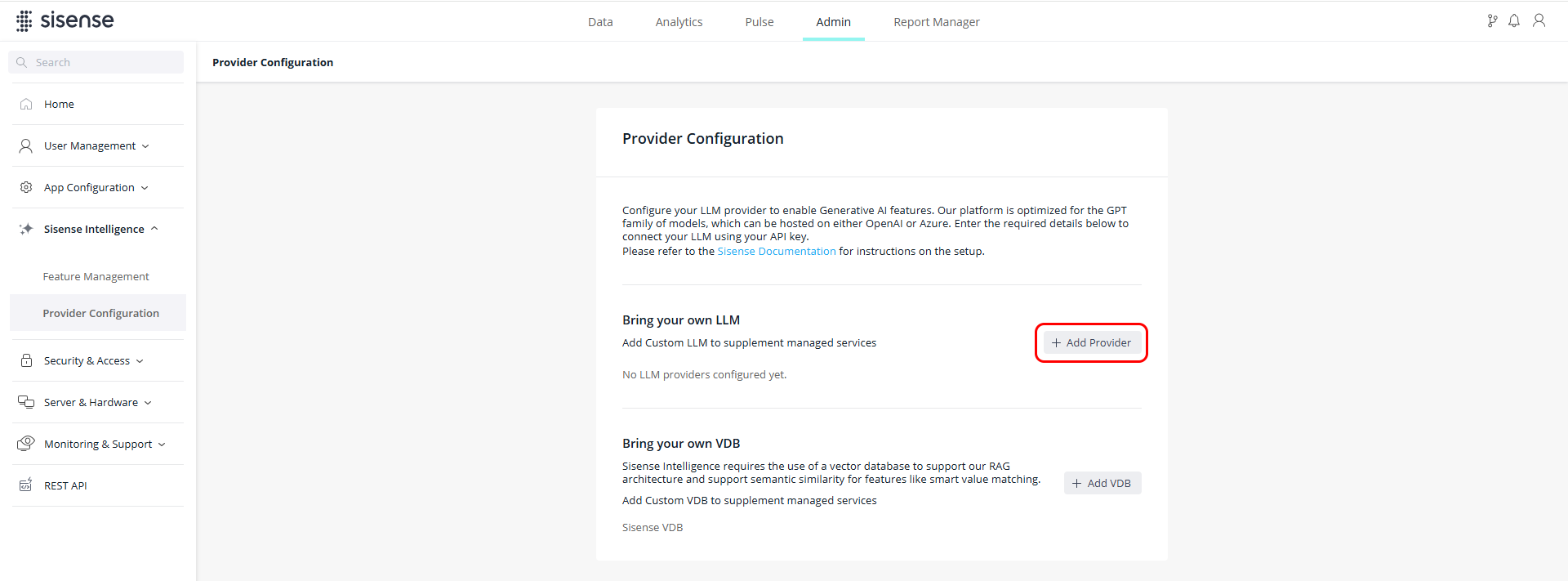

In Sisense, in the Admin tab, select Sisense Intelligence > Provider Configuration.

-

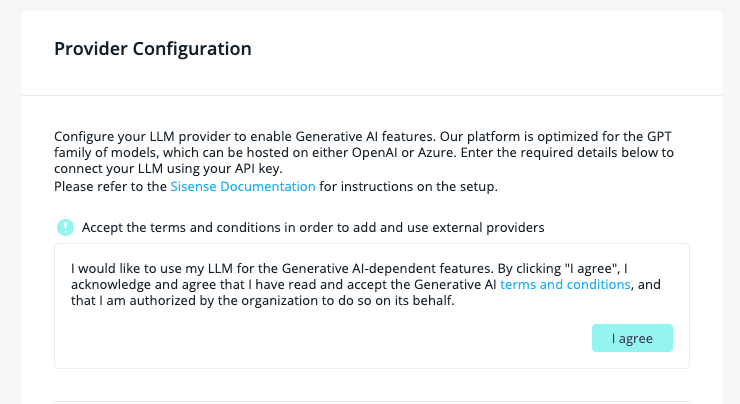

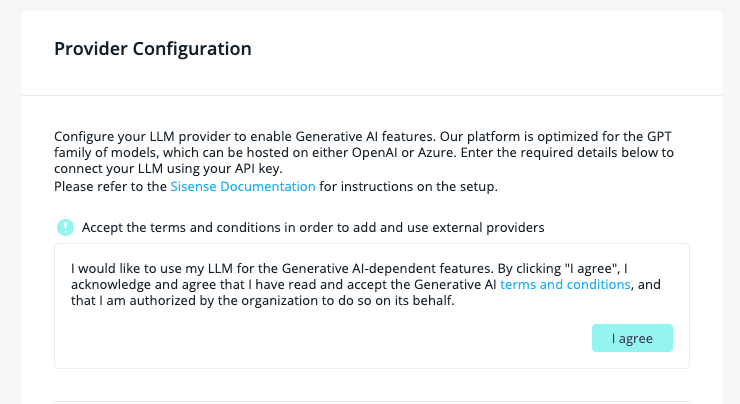

Before configuring an LLM provider for the first time, click I agree to approve the terms and conditions in order to proceed with using the cloud-linked features.

- In the Bring your own LLM section, click + Add Provider.

-

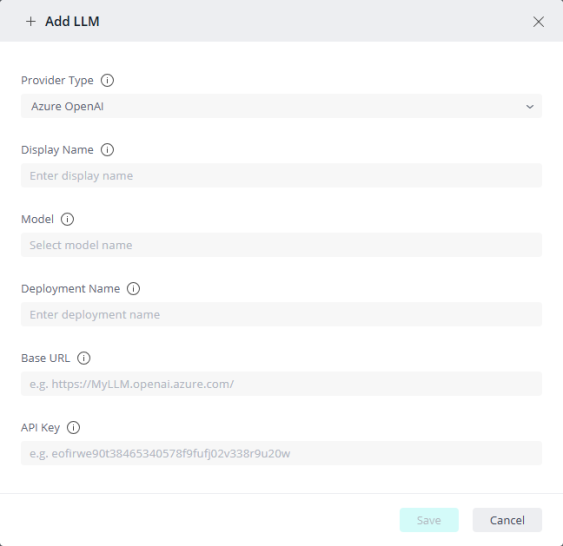

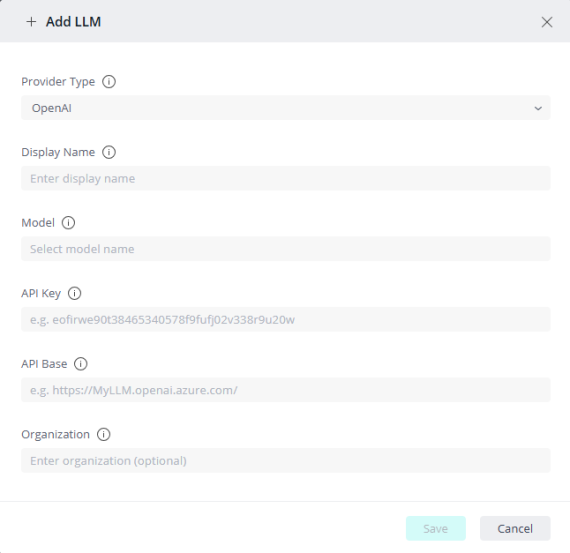

In the + Add LLM window, set the following:

-

Provider Type - Azure OpenAI

-

Display Name - The name to display for this LLM (an alias for the deployment)

-

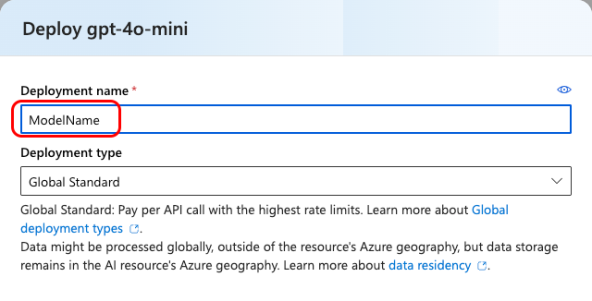

Model - The model name that you set in Azure

-

Deployment Name - A name that you provide for this deployment's instance

-

Base URL - The API base URL

-

API Key - The API key

| Field | Description | Example |
|---|---|---|
| Model Name | Model deployment name you chose during the deployment process on your LLM platform. | my-gpt-4o-mini |
| Base URL | Endpoint URL of your LLM instance. | https://MyLLM.openai.azure.com/ |
| API Key | API key for authenticating and authorizing your requests to the model's API. | <your_api_key> |
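To check the Azure OpenAI values outside Sisense, the sketch below shows how the Base URL, deployment name, and API key map onto a chat completions request to the Azure OpenAI REST API (`POST {Base URL}/openai/deployments/{deployment}/chat/completions?api-version={version}`, authenticated with an `api-key` header). It only builds the request and does not send it. The helper names and the `2024-06-01` API version are assumptions for illustration, not Sisense settings; use an API version that your resource supports.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: an Azure OpenAI REST API version; use one your resource supports.
API_VERSION = "2024-06-01"


def azure_base_url(instance_name: str) -> str:
    """Return the Base URL for an Azure OpenAI resource instance name."""
    return f"https://{instance_name}.openai.azure.com/"


def build_azure_chat_request(base_url: str, deployment_name: str, api_key: str,
                             api_version: str = API_VERSION) -> Request:
    """Build (but do not send) a minimal chat completions request."""
    # The deployment name, not the base model name, selects the model in the URL.
    query = urlencode({"api-version": api_version})
    url = (f"{base_url.rstrip('/')}/openai/deployments/{deployment_name}"
           f"/chat/completions?{query}")
    body = json.dumps({"messages": [{"role": "user", "content": "ping"}]})
    return Request(
        url,
        data=body.encode("utf-8"),
        method="POST",
        # Azure OpenAI accepts the key in the api-key header.
        headers={"api-key": api_key, "Content-Type": "application/json"},
    )


# Usage with the example values from the table above (the key is a placeholder).
req = build_azure_chat_request(azure_base_url("MyLLM"), "my-gpt-4o-mini",
                               "<your_api_key>")
print(req.full_url)
```

Passing the request to `urllib.request.urlopen` sends it; a successful response is a reasonable sign that the Base URL, deployment name, and key work together, although Sisense's Test step may check additional settings.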

-

-

-

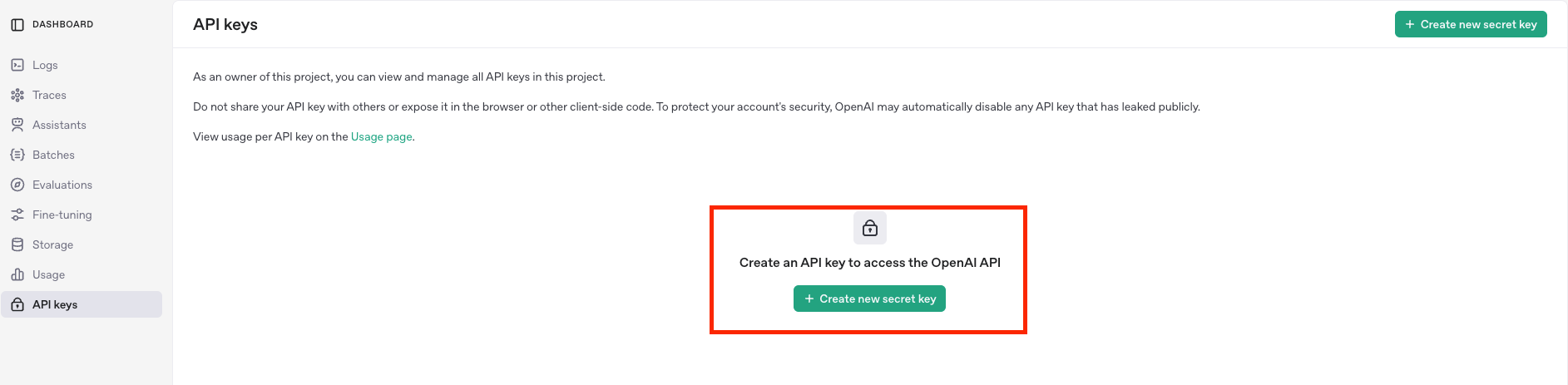

Create an API key to access the OpenAI API.

-

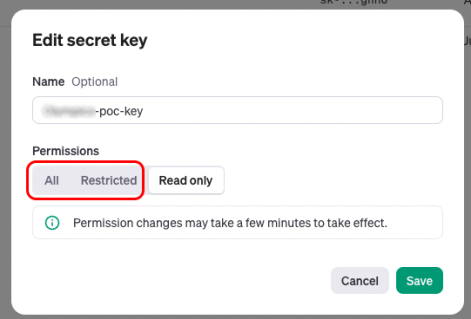

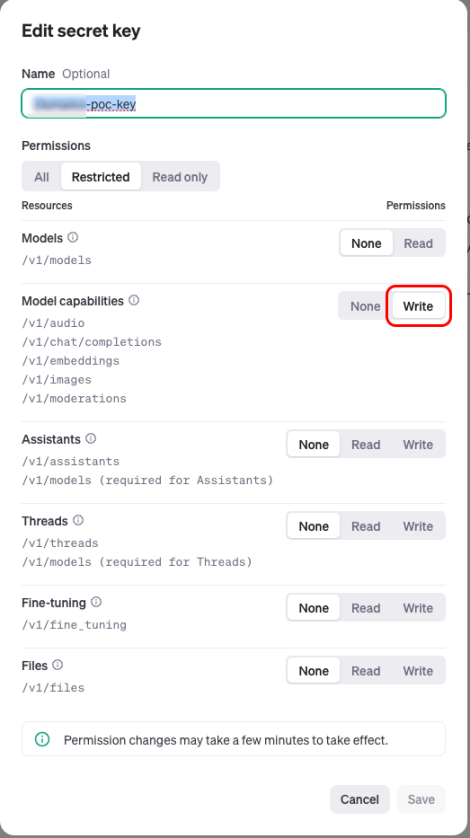

The Sisense API requires Write access for your Model capabilities. Therefore, when creating/editing your secret key, set the Permissions to either All or Restricted. If you set the permissions to "Read only" your Sisense GenAI features will not work.

If you set the Permissions to "Restricted", you must also set the Permissions for the "Model capabilities" to Write.

-

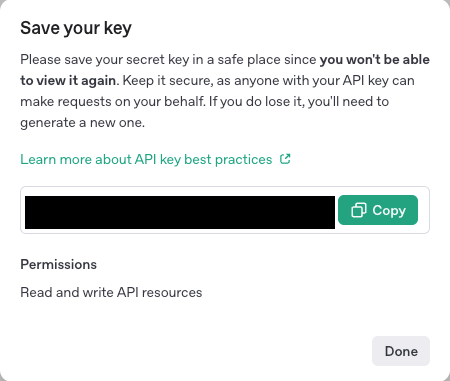

Save your key.

- In Sisense, in the Admin tab, select Sisense Intelligence > Provider Configuration.

- Before configuring an LLM provider for the first time, click I agree to accept the terms and conditions for the cloud-linked features.

- In the Bring your own LLM section, click + Add Provider.

- In the + Add LLM window, set the following:

  - Provider Type - OpenAI
  - Display Name - The name to display for this LLM
  - Model - The OpenAI model name
  - API Key - The API key
  - API Base - The API base URL
  - Organization - Optional organization name for OpenAI billing purposes (if left blank, the default is used)

| Field | Description | Example |
|---|---|---|
| Model Name | Model name corresponding to your OpenAI API key (see the OpenAI models list). This must be the exact model identifier used for API requests. | gpt-4o-mini-2024-07-18 |
| API Key | API key for authenticating and authorizing your requests to the model's API. Note: Ensure that the key permissions are set to All, or to Restricted with Model capabilities set to Write. A Read only key does not work. See Setting Up Your LLM for more information. | <your_api_key> |

- Once you have configured your LLM, click Test to validate your configuration. If the test succeeds, click Save.
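To check an OpenAI key outside Sisense, the following sketch mirrors the key-permission rule from the steps above and builds, without sending, a chat completions request from the Model, API Key, API Base, and Organization values. It assumes the API Base is the public `https://api.openai.com/v1` endpoint and that the organization is passed in the OpenAI API's `OpenAI-Organization` header. The function names are hypothetical, and Sisense's own Test step may check more than this.

```python
import json
from typing import Optional
from urllib.request import Request

# Assumption: the public OpenAI API base URL.
DEFAULT_API_BASE = "https://api.openai.com/v1"


def key_permissions_ok(permissions: str,
                       model_capabilities: Optional[str] = None) -> bool:
    """Mirror the documented rule for OpenAI secret-key permissions."""
    if permissions == "All":
        return True
    if permissions == "Restricted":
        # Restricted keys work only if Model capabilities is set to Write.
        return model_capabilities == "Write"
    # "Read only" (or any other value) does not work with Sisense GenAI features.
    return False


def build_openai_chat_request(model: str, api_key: str,
                              api_base: str = DEFAULT_API_BASE,
                              organization: Optional[str] = None) -> Request:
    """Build (but do not send) a minimal chat completions request."""
    headers = {"Authorization": f"Bearer {api_key}",
               "Content-Type": "application/json"}
    if organization:
        # Sent only when set; per the field description, blank means the default is used.
        headers["OpenAI-Organization"] = organization
    body = json.dumps({"model": model,
                       "messages": [{"role": "user", "content": "ping"}]})
    return Request(f"{api_base.rstrip('/')}/chat/completions",
                   data=body.encode("utf-8"), method="POST", headers=headers)


req = build_openai_chat_request("gpt-4o-mini-2024-07-18", "<your_api_key>")
print(req.full_url)  # https://api.openai.com/v1/chat/completions
```

Sending the request with `urllib.request.urlopen` and getting a successful response is a quick sign that the key, its permissions, and the model name work together.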

Supported LLM Providers and Model Versions

Sisense currently supports OpenAI foundation models hosted either directly by OpenAI or through Azure OpenAI Service.

Sisense may add support for additional model versions from time to time, after verifying their quality.

You are responsible for managing the model version you use.

| Model | Version | Azure OpenAI | OpenAI | Supported by assistant |
|---|---|---|---|---|
| GPT-4.1 | gpt-4.1-0414 | ✔ | ✔ | ✔ Recommended |
| GPT-4o | gpt-4o-1120 | ✔ | ✔ | ✔ |
| GPT-4.1-mini | gpt-4.1-mini-0414 | ✔ | ✔ | Partial support. Not recommended for the assistant: mini models may provide an inconsistent experience, and some functionality does not perform reliably. |
| GPT-4o-mini | gpt-4o-mini-0718 | ✔ | ✔ | Partial support. Not recommended for the assistant: mini models may provide an inconsistent experience, and some functionality does not perform reliably. |
| GPT-3.5 | gpt-35-turbo-0125 | ✔ | ✔ | X |
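If you script checks around your deployment, the table above can be transcribed into a lookup. The sketch below is a hypothetical helper, not part of Sisense; it encodes only the provider and assistant-support columns (model versions are omitted), so it must be updated by hand whenever the table changes.

```python
from typing import NamedTuple


class Support(NamedTuple):
    azure_openai: bool
    openai: bool
    assistant: str  # "recommended", "full", "partial", or "none"


# Transcribed from the supported-models table (model versions omitted).
SUPPORTED_MODELS = {
    "GPT-4.1": Support(True, True, "recommended"),
    "GPT-4o": Support(True, True, "full"),
    "GPT-4.1-mini": Support(True, True, "partial"),
    "GPT-4o-mini": Support(True, True, "partial"),
    "GPT-3.5": Support(True, True, "none"),
}

# Short verdicts for each value of the "Supported by assistant" column.
ASSISTANT_NOTES = {
    "recommended": "supported, recommended for the assistant",
    "full": "supported",
    "partial": "supported, with partial assistant support",
    "none": "supported, but not by the assistant",
}


def check_model(model: str, provider: str) -> str:
    """Return a short verdict for a model on a given Provider Type."""
    if provider not in ("Azure OpenAI", "OpenAI"):
        raise ValueError(f"unknown provider type: {provider}")
    support = SUPPORTED_MODELS.get(model)
    if support is None:
        return "not listed"
    available = support.azure_openai if provider == "Azure OpenAI" else support.openai
    if not available:
        return "not supported on this provider"
    return ASSISTANT_NOTES[support.assistant]


print(check_model("GPT-4o-mini", "Azure OpenAI"))  # supported, with partial assistant support
```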