Troubleshooting Build Memory Issues

Out-of-Memory Build Issues

When building an ElastiCube with connectors that use the JVM framework, you might receive an "out of memory" error. This can happen when not enough memory is allocated to the processes the build needs, or when the machine itself needs more RAM. For more information about minimum requirements, see Minimum Requirements for Sisense in Linux Environments.

For other build-related memory issues, see the Community site, for example Managing Linux Build Service Settings for Optimal Performance and Stability.

Allocating Memory According to Connector Deployment Type

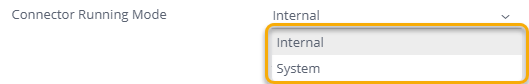

How you change the memory allocated to your connectors depends on the connector deployment type, which is shown in the Data Group settings.

To see which connector deployment type your system is using:

- Open the Admin tab, then search for and select Data Groups (located under Server & Hardware), or go to http://<IP address:Port>/app/settings/datagroups.
- Click the Edit icon of the Data Group. The Edit Data Group window is displayed.
- Note the Connector Running Mode (the connector deployment type) to determine which instructions below to follow.

Connector deployment types (“Connector Running Mode”):

- Internal: This connector deployment type supports both:
  - Connectors running under the new connectors framework. The new connectors framework (and its connectors) is the default beginning with release 8.2.4. See New Connectors Framework for more information.
  - Older JVM connectors that are deployed in the Kubernetes connectors pod.
- System: This connector deployment type supports only the older JVM connectors that are deployed in the Kubernetes connectors pod.

Memory Allocation for JVM Connectors Deployed in Kubernetes

To allocate more memory to the older JVM connectors, you must increase the memory allocated to both:

- The JVM of the connectors
- The Kubernetes connectors pod in which the connectors JVM runs

JVM settings:

- Open Admin, search for and select System Management (located under Server & Hardware), and click File Management, or go to http://<IP address:Port>/app/explore.
- Navigate to the connectors folder and open the configuration.json file.
  Note: The configuration.json file is not present when you are using the new connectors framework and no JVM connectors are deployed. (This is possible only with the Internal connector deployment type.)
- In the line that starts with "jvmParameters", look for a parameter that starts with -Xmx, such as -Xmx2G or -Xmx500M. This parameter sets the maximum heap size of the JVM. For example:

      "jvmParameters": ["-Xmx8g", "-server", "-Dfile.encoding=UTF-8"],

  When this parameter does not exist, the heap size is set by default to one-third of the computer's RAM. To change the default, add an -Xmx parameter to the jvmParameters list in your configuration.json file, as in the example, and set its value according to your needs. For additional information about the -Xmx parameter, see What are the -Xms and -Xmx parameters when starting JVM?.
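If you work on a copy of configuration.json from a shell (an assumption; the documented route is the File Management page), the -Xmx check and edit can be scripted. The following is a minimal bash sketch. The helper names get_xmx and set_xmx are hypothetical. It only replaces an -Xmx entry that already exists and keeps a .bak copy of the original file.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a local copy of connectors/configuration.json.
# Assumption: the heap setting appears as a string such as "-Xmx8g".

# Print the -Xmx parameter found in the file (for example -Xmx8g).
# Exits non-zero when the file contains no -Xmx parameter.
get_xmx() {
  grep -oE -e '-Xmx[0-9]+[kKmMgG]?' "$1"
}

# Replace the existing -Xmx value with a new size such as 12g or 4096m.
# The original file is kept as <file>.bak.
set_xmx() {
  local file=$1 new=$2
  if ! printf '%s\n' "$new" | grep -qE '^[0-9]+[kKmMgG]?$'; then
    echo "Invalid JVM size: $new" >&2
    return 1
  fi
  if ! get_xmx "$file" > /dev/null; then
    echo "No -Xmx parameter in $file; add one to jvmParameters first." >&2
    return 1
  fi
  cp "$file" "$file.bak"
  sed -E "s/-Xmx[0-9]+[kKmMgG]?/-Xmx${new}/" "$file.bak" > "$file"
}

# Example:
#   get_xmx configuration.json        # prints the current value
#   set_xmx configuration.json 12g    # sets -Xmx12g
```

The sketch only rewrites the -Xmx token, so the other JVM parameters on the line (such as -server) are left untouched.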

Pod settings:

- The JVM runs inside a pod, so the pod's memory limit must match or exceed the new JVM -Xmx value. To change the pod's memory limit, edit the deployment of the connectors pod:

      kubectl -n sisense edit deployment connectors

  In the limits section of the container's resources, set the memory value to at least the size of the JVM heap.
- Restart the JVM service by deleting the connectors pod. The deployment re-creates the pod with the new settings:

      kubectl -n sisense delete pod -l app=connectors

- Verify that the JVM parameters you specified were accepted. The JVM parameter values are in the Command Line column for the Java processes.

Use these Kubernetes CLI commands to check your memory allocation changes:

- To print the arguments of the connector processes in the connectors pod, run:

      kubectl exec $(kubectl -n sisense get pod -l app=connectors -o custom-columns=":.metadata.name" --no-headers) -n sisense -- ps -eo args --no-headers | grep ContainerLauncherApp | awk '{print $10 " " $2}'

- To check the older JVM connectors running in the connectors container of the build pod during a build, run:

      kubectl exec $(kubectl get po -l mode=build -o custom-columns=":.metadata.name,:.spec.containers[1].name" --no-headers -n sisense | awk '{print $1" -c " $2}') -n sisense -- ps -eo args --no-headers | grep ContainerLauncherApp | awk '{print $10 " " $2}'

- Check that all the connectors are running and that the JVM value is correct.
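When comparing the pod limit with the heap size, note that the two use different unit conventions. A JVM suffix such as g means gibibytes (1024^3 bytes). In a Kubernetes memory limit, G means 10^9 bytes and Gi means 1024^3 bytes, so a limit of 8G is slightly smaller than -Xmx8g. The following bash sketch converts both values to MiB and reports whether the limit covers the heap. The helper names are hypothetical, and only the common suffixes are handled. Because the JVM also uses memory outside the heap (for example metaspace and thread stacks), leaving some headroom above the -Xmx value is safer than an exact match.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare a JVM -Xmx value with a Kubernetes memory limit.
# Only common suffixes are handled; results are whole MiB (rounded down).

# JVM sizes: k, m, g are 1024-based units; no suffix means bytes.
jvm_to_mib() {
  local v=${1#-Xmx}
  local num=${v%[kKmMgG]}
  case $v in
    *[gG]) echo $(( num * 1024 )) ;;
    *[mM]) echo "$num" ;;
    *[kK]) echo $(( num / 1024 )) ;;
    *)     echo $(( v / 1048576 )) ;;
  esac
}

# Kubernetes quantities: Ki/Mi/Gi are 1024-based, k/M/G are 1000-based.
k8s_to_mib() {
  local q=$1
  case $q in
    *Gi) echo $(( ${q%Gi} * 1024 )) ;;
    *Mi) echo "${q%Mi}" ;;
    *Ki) echo $(( ${q%Ki} / 1024 )) ;;
    *G)  echo $(( ${q%G} * 1000000000 / 1048576 )) ;;
    *M)  echo $(( ${q%M} * 1000000 / 1048576 )) ;;
    *k)  echo $(( ${q%k} * 1000 / 1048576 )) ;;
    *)   echo $(( q / 1048576 )) ;;
  esac
}

# Exit status 0 if the pod limit is at least the JVM heap size, else 1.
limit_covers_heap() {
  local heap limit
  heap=$(jvm_to_mib "$1")
  limit=$(k8s_to_mib "$2")
  if [ "$limit" -ge "$heap" ]; then
    echo "OK: limit ${limit} MiB >= heap ${heap} MiB"
  else
    echo "TOO LOW: limit ${limit} MiB < heap ${heap} MiB"
    return 1
  fi
}

# Example usage (container index 0 is an assumption about the deployment):
#   limit=$(kubectl -n sisense get deployment connectors \
#     -o jsonpath='{.spec.template.spec.containers[0].resources.limits.memory}')
#   limit_covers_heap 8g "$limit"
```

For example, limit_covers_heap 8g 8Gi passes, while limit_covers_heap 8g 8G reports that the limit is too low.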

High Memory Consumption Due to Many Active Connectors

If you have many active JVM connectors and you are not using the new connectors framework, you might experience high memory consumption. To reduce the number of active JVM connectors, disable the connectors you are not using.

Note: This issue is resolved by the new connectors framework. See New Connectors Framework for more information.

To disable unused connectors:

- Open the usedConnectors.json file in the connectors folder.
  Note: The usedConnectors.json file is not present when you are using the new connectors framework and no JVM connectors are deployed. This is possible only with the Internal connector deployment type. (See Allocating Memory According to Connector Deployment Type for more information.)
- Add or remove connectors in the usedConnectors.json file.
- Set the Custom connectors field to TRUE:
  - TRUE: Only connectors listed in "displayConnectors" are active. (Only active connectors consume memory.)
  - FALSE: All connectors are active.
- Restart the connectors pod:

      kubectl -n sisense delete pod -l app=connectors
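A misplaced comma or bracket is easy to introduce when you add or remove connectors by hand. Before restarting the pod, it may help to confirm that the edited file still parses as JSON. This is a minimal sketch that assumes you have a copy of usedConnectors.json (or configuration.json) on a machine with python3. The helper name check_json is hypothetical. It checks JSON syntax only, not whether the connector names or field values are valid.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if every file given parses as JSON, else 1.
# Python's built-in json.tool module is used purely as a syntax checker.
check_json() {
  local f status=0
  for f in "$@"; do
    if python3 -m json.tool "$f" > /dev/null 2>&1; then
      echo "valid JSON: $f"
    else
      echo "INVALID JSON: $f" >&2
      status=1
    fi
  done
  return "$status"
}

# Example:
#   check_json usedConnectors.json configuration.json
```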